(This example is found in Kyma Sound Library / Effects Processing / Spectral Processing-Live.kym)

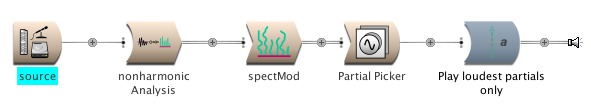

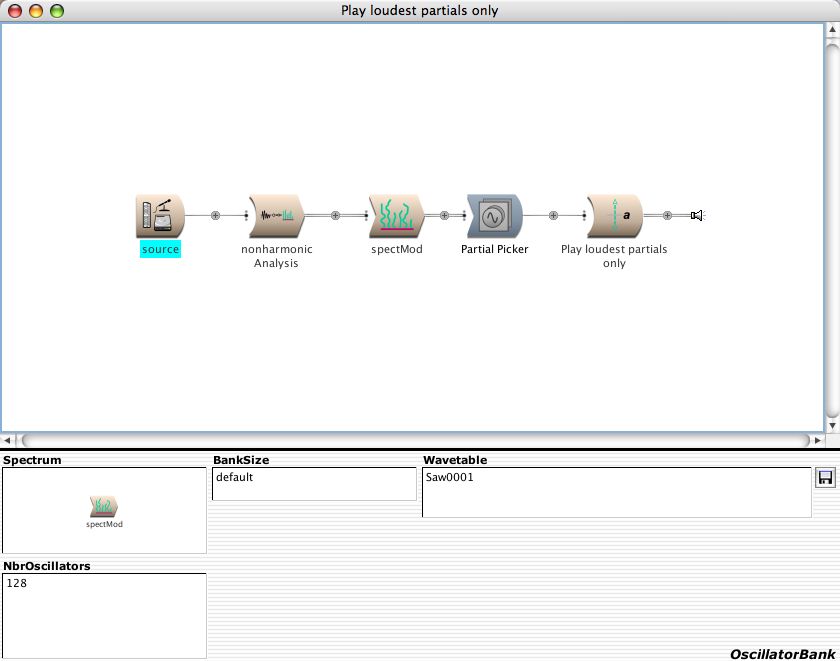

A sound source is analyzed by a LiveSpectralAnalysis module to obtain its spectrum; the spectrum is modified in the SpectrumModifier; the modified spectrum is resynthesized by an OscillatorBank, and the result goes through a Level module for amplification or attenuation.

Let's follow the signal from left to right as it passes through each module:

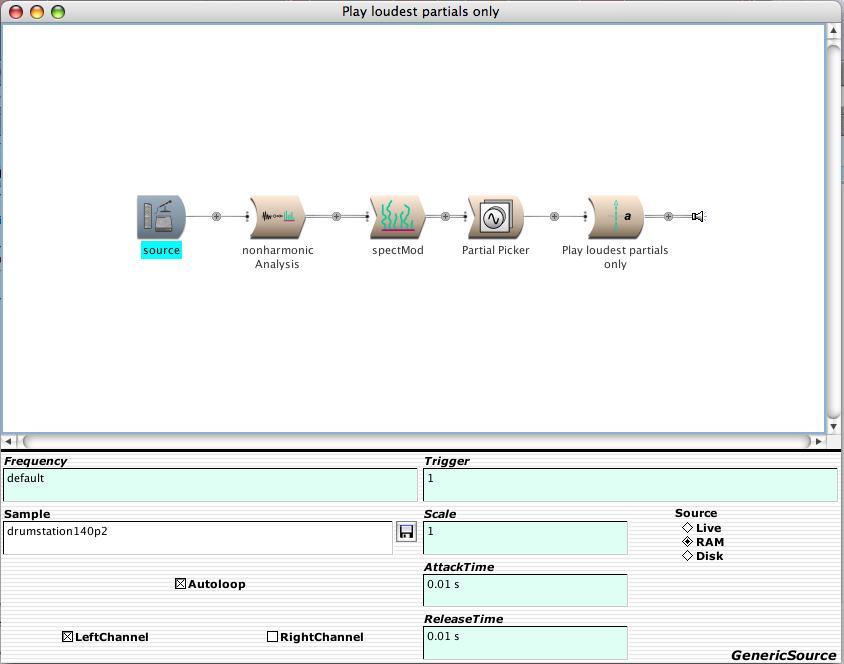

Source:

The source of audio input is a GenericSource looping a drum loop sample out of RAM. The cyan highlighting on the name 'source' indicates that this is the replaceable input: in the Sound Browser, you can substitute your own live microphone input or any other Sound for this Sound.

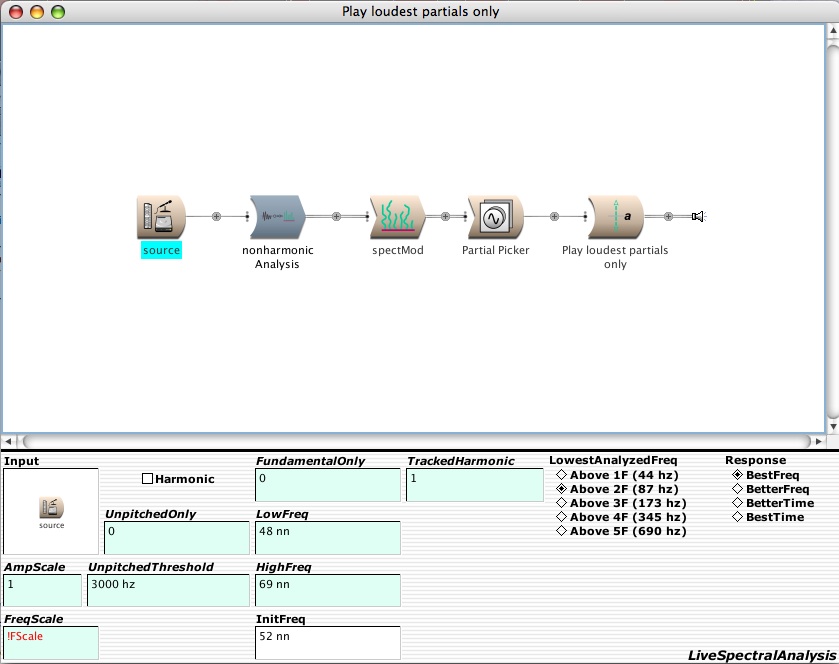

LiveSpectralAnalysis:

This module analyzes the spectrum of the live input. Imagine that you have a graphic equalizer with 256 bands spaced 87 Hz apart from each other. Now imagine that you could monitor the time-varying amplitude output of each individual band and use that as an amplitude envelope. Imagine you also have a way to track how the frequency in each band deviates from the center of that band and use that as a frequency envelope.

The output of the LiveSpectralAnalysis is a collection of amplitude and frequency envelopes, one for each of the analysis bandpass filters. In this case, there are 256 pairs of envelopes. (If you had selected 44 Hz as the lowest analyzed frequency, there would be 512 pairs of envelopes.)
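The relationship between the number of bands and their spacing can be sketched in a few lines of Python. This is only an illustration: the 44.1 kHz sample rate and the assumption that the bands are spread evenly from 0 Hz up to half the sample rate are not stated in the text, and `band_spacing_hz` is a hypothetical helper, not a Kyma function.

```python
def band_spacing_hz(sample_rate_hz: float, n_bands: int) -> float:
    """Spacing between equally spaced analysis bands covering 0 Hz to Nyquist."""
    nyquist_hz = sample_rate_hz / 2.0
    return nyquist_hz / n_bands


SAMPLE_RATE_HZ = 44_100  # assumption: the sample rate is not given in the text

for n_bands in (256, 512):
    spacing = band_spacing_hz(SAMPLE_RATE_HZ, n_bands)
    print(n_bands, "bands ->", round(spacing, 1), "Hz apart")
```

Under these assumptions the spacing comes out at roughly 86 Hz for 256 bands and 43 Hz for 512 bands, close to the 87 Hz and 44 Hz figures quoted above: doubling the number of bands halves the spacing.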

!FScale scales the frequencies of all the frequency envelopes up or down; each frequency is multiplied by the value of !FScale.
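As a minimal Python sketch of that multiplication (the function name and the sample frequencies are made up for illustration; in Kyma the scaling happens inside the module):

```python
def scale_frequencies(frequencies_hz, f_scale):
    """Multiply every track frequency in one frame by the same factor."""
    return [f * f_scale for f in frequencies_hz]


frame = [110.0, 220.0, 330.0]          # hypothetical track frequencies for one frame
print(scale_frequencies(frame, 2.0))   # every track moves up one octave
print(scale_frequencies(frame, 0.5))   # every track moves down one octave
```

Because every frequency is multiplied by the same value, the ratios between tracks are preserved, so the spectrum is transposed rather than shifted by a fixed number of Hz.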

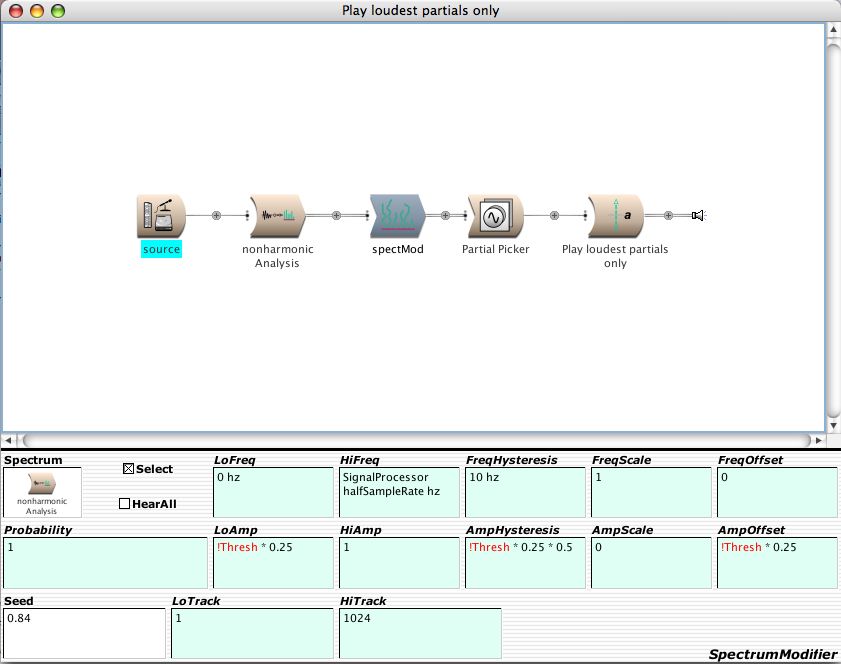

SpectrumModifier:

The SpectrumModifier is selecting only some of the amplitude/frequency envelope pairs based on some criteria. In the SpectrumModifier, each amplitude/frequency envelope pair is called a Track, since each of these envelope pairs would look like a horizontal "track" if you were to look at a spectrum file in the Spectrum Editor.

In this case, the tracks are selected on the basis of amplitude. If an amplitude envelope drops below !Thresh * 0.25, it is rejected. If it is higher than !Thresh * 0.25, it is passed through to the output.

AmpHysteresis is used to give a little "inertia" to the amplitude selection process. This is to prevent tracks that are near the threshold from popping in and out on every frame. Hysteresis means that you tend to stick with your opinion until there is a very strong reason to switch. Once you have switched, it takes a strong change before you will give up and switch back.
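One common way to get this kind of "inertia" is a two-threshold gate. The sketch below is an interpretation, not Kyma's documented algorithm: how AmpHysteresis is actually applied inside the SpectrumModifier is not stated here, and the function name, the hysteresis width, and the sample values are all hypothetical.

```python
def select_with_hysteresis(amplitudes, threshold, hysteresis):
    """Return one pass/reject decision per frame for a single track."""
    selected = False
    decisions = []
    for amp in amplitudes:
        if selected:
            # Already passing: reject only after a clear drop below the threshold.
            if amp < threshold - hysteresis:
                selected = False
        else:
            # Currently rejected: pass only after a clear rise above the threshold.
            if amp > threshold + hysteresis:
                selected = True
        decisions.append(selected)
    return decisions


thresh = 0.8  # stand-in for !Thresh, so the selection threshold is 0.8 * 0.25 = 0.2
track_amps = [0.10, 0.19, 0.21, 0.26, 0.21, 0.19, 0.21, 0.14, 0.21]
print(select_with_hysteresis(track_amps, thresh * 0.25, 0.05))
```

With a plain threshold at 0.2, this track would switch on and off every time it wobbles between 0.19 and 0.21. With the two-threshold gate it switches on once (at 0.26) and off once (at 0.14).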

An AmpOffset is being added to each value of each amplitude envelope that has passed the selection test. So not only are the tracks below the threshold amplitude being rejected, but the remaining tracks are being boosted in amplitude. If the tracks are loud enough, this additive offset may even cause them to clip at 1. You won't hear this as an ugly audio clipping though, since it is the amplitude envelope that is being clipped, not the signal itself.
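A small Python sketch of the offset-and-clip step, using made-up envelope values and a hypothetical helper name:

```python
def offset_and_clip(amp_envelope, amp_offset):
    """Add a constant offset to every envelope value and clip the result at 1."""
    return [min(1.0, amp + amp_offset) for amp in amp_envelope]


envelope = [0.5, 0.75, 0.9, 0.75]   # hypothetical amplitude envelope, one value per frame
print(offset_and_clip(envelope, 0.25))
```

The loud frames simply flatten out at full amplitude. The oscillator driven by this envelope still produces an undistorted sine wave; only its loudness contour loses its peaks.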

PartialPicker:

The OscillatorBank applies each frequency/amplitude envelope pair to a single oscillator in order to resynthesize the time domain signal from the (now modified) spectrum.
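The idea of one oscillator per envelope pair can be sketched as a sum of sine waves. This is a simplified illustration under stated assumptions, not Kyma's implementation: the function and parameter names are hypothetical, and the envelope values are held constant for the duration of each frame rather than interpolated.

```python
import math


def oscillator_bank(amp_frames, freq_frames, sample_rate_hz, samples_per_frame):
    """Sum one sine oscillator per track, driven by per-frame amplitude and frequency."""
    n_tracks = len(amp_frames[0])
    phases = [0.0] * n_tracks          # running phase of each oscillator, in radians
    output = []
    for amps, freqs in zip(amp_frames, freq_frames):
        for _ in range(samples_per_frame):
            sample = 0.0
            for k in range(n_tracks):
                sample += amps[k] * math.sin(phases[k])
                # Advance the phase by this track's current frequency.
                phases[k] += 2.0 * math.pi * freqs[k] / sample_rate_hz
            output.append(sample)
    return output


# Two tracks, two frames: the second track is silenced in the second frame.
amp_frames = [[0.5, 0.25], [0.5, 0.0]]
freq_frames = [[220.0, 440.0], [220.0, 440.0]]
samples = oscillator_bank(amp_frames, freq_frames, 44_100, 256)
print(len(samples))
```

Keeping a running phase per oscillator, instead of recomputing it from the time index, avoids discontinuities when a track's frequency changes from one frame to the next.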

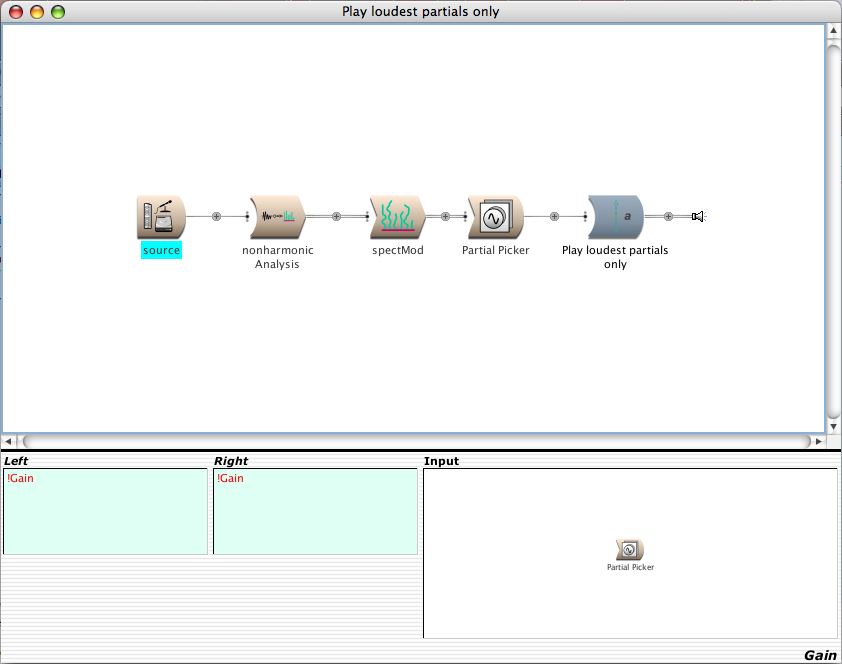

Level:

Here, !Gain is used to boost or attenuate the final output.

Compare this Sound to Saw resynth & saturate in the same file.

This Sound has been deconstructed by -- CarlaScaletti - 22 Nov 2005

Maximum pitch deviation

How does pitch envelope following work?

Is there "track crossing" involved? Does the freq env "force" an update on each frame, or does it simply "idle" when no freq change occurs?

I'm asking because I have this theoretical limit situation in mind: the sound being analysed is made of 256 STEADY sine waves; how does the resynth process behave if only the freq env of track 256 (in this case harmonic number 256) goes very low (in a glissando way)?

-- KarlMousseau - 22 Nov 2005

Think of the nonharmonic analysis as a bank of bandpass filters. If one of the partials changes in frequency in a glissando, it will leave its filter band and cross over into the adjacent band. When this happens, the amplitude envelope in that band fades out as the amplitude of the adjacent band fades in. If there happens to be another partial already in that frequency band, the two partials could interfere with one another (typically as "beats", since it would be the sum of two slightly detuned sine waves).

-- CarlaScaletti - 23 Nov 2005
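The "beats" mentioned in the reply above follow from a standard trigonometric identity: the sum of two sine waves equals a sine at the average frequency multiplied by a slow cosine envelope, which produces beats at a rate equal to the frequency difference. A minimal Python check, with hypothetical function names and example frequencies:

```python
import math


def beat_frequency_hz(f1_hz, f2_hz):
    """Rate at which the combined amplitude swells and fades."""
    return abs(f1_hz - f2_hz)


def two_partials(f1_hz, f2_hz, t):
    """Sum of two equal-amplitude sine waves at time t (seconds)."""
    return math.sin(2 * math.pi * f1_hz * t) + math.sin(2 * math.pi * f2_hz * t)


def carrier_times_envelope(f1_hz, f2_hz, t):
    """The same signal written as a slow envelope times a fast carrier."""
    envelope = 2.0 * math.cos(math.pi * (f1_hz - f2_hz) * t)
    carrier = math.sin(math.pi * (f1_hz + f2_hz) * t)
    return envelope * carrier


print(beat_frequency_hz(440.0, 443.0), "beats per second")
print(two_partials(440.0, 443.0, 0.01), carrier_times_envelope(440.0, 443.0, 0.01))
```

The two printed sample values agree up to floating-point rounding, which is the identity at work.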

kurt + carla, thanks for setting this up! the explanation came out fantastically. really helpful and useful information. thank you.

-- TaylorDeupree - 22 Nov 2005

Frame rate, Latency

Is the frame rate fixed, or does it change relative to the Lowest Analysed Freq? In other words, is Lowest Analysed Freq there to optimize FFT length (latency), or does it serve other goals?

-- KarlMousseau - 24 Nov 2005

The size of a frame is equal to the number of partials so yes, the frame rate is halved each time you halve the analysis frequency. The Lowest Analyzed Freq is the lowest frequency that the analysis is looking for in your input signal (it is not an optimization parameter). So you should set that parameter as low as is necessary (in order to catch the fundamental frequency of your input) but as high as you can get away with (in order to maximize the frame rate and minimize time smearing in the analysis).

-- CarlaScaletti - 25 Nov 2005
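The trade-off in the last reply can be put into rough numbers. The sketch below assumes that "the size of a frame" is measured in samples and that the sample rate is 44.1 kHz; neither is stated explicitly in the thread, and `frames_per_second` is a hypothetical helper.

```python
def frames_per_second(sample_rate_hz, n_partials):
    """Frame rate when one frame spans as many samples as there are partials."""
    # Assumption: frame size (in samples) == number of partials.
    return sample_rate_hz / n_partials


SAMPLE_RATE_HZ = 44_100  # assumption: not given in the thread

for n_partials in (256, 512):
    rate = frames_per_second(SAMPLE_RATE_HZ, n_partials)
    print(n_partials, "partials:", round(rate, 1), "frames/s,",
          round(1000.0 / rate, 1), "ms per frame")
```

Under these assumptions, going from 256 to 512 partials halves the frame rate and doubles the duration of each frame, which is the time smearing the reply warns about.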